Research Portfolio

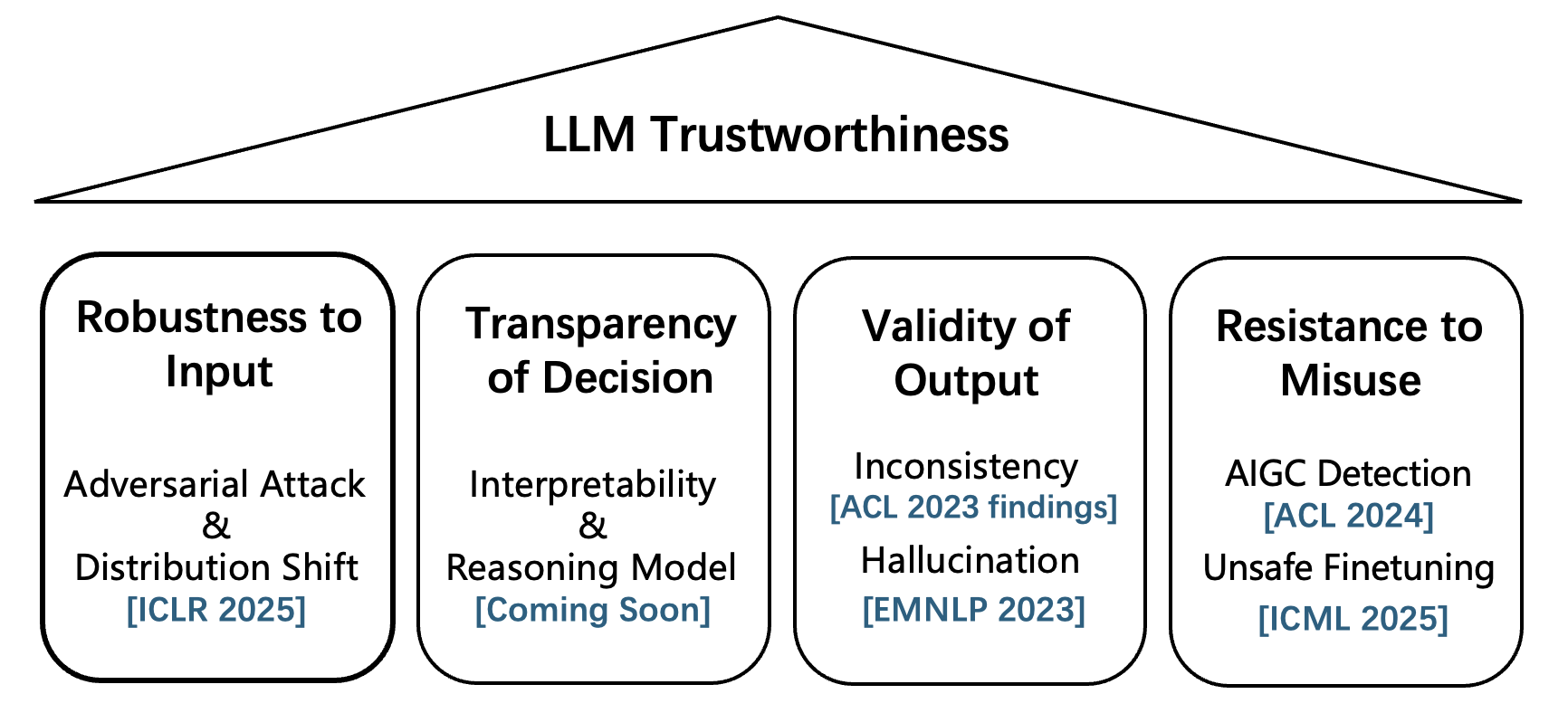

I’m dedicated to enhancing the reliability of large language models (LLMs) across four dimensions:

- Robustness to Input: Ensuring LLMs can handle adversarial attacks and distribution shifts (ICLR 2025).

- Transparency of Decision: Improving interpretability techniques and reasoning models (BRIDGE).

- Validity of Output: Addressing hallucinations (EMNLP 2023) and inconsistencies in model outputs (Findings of ACL 2023).

- Resistance to Misuse: Preventing the use of AI for cheating, plagiarism (ACL 2024), and unsafe fine-tuning (ICML 2025).